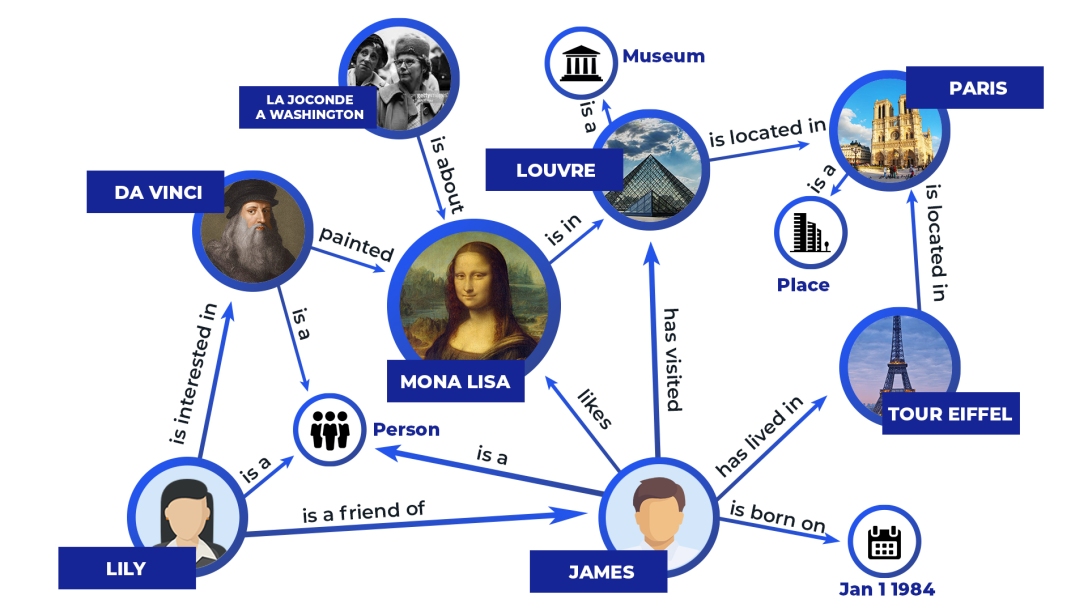

Question answering is a very popular natural language understanding task. It has applications in a wide variety of fields such as dialog interfaces, chatbots, and various information retrieval systems. Answering questions using knowledge graphs adds a new dimension to these fields. “Question answering over knowledge graphs (KGQA) aims to provide the users with an interface… Continue reading Introduction to Question Answering over Knowledge Graphs

Category: Deep Learning

BERT Explained – A list of Frequently Asked Questions

What is BERT? BERT is a deep learning model that has achieved state-of-the-art results on a wide variety of natural language processing tasks. It stands for Bidirectional Encoder Representations from Transformers. It has been pre-trained on Wikipedia and BooksCorpus and requires task-specific fine-tuning. What is the model architecture of BERT? BERT is a multi-layer bidirectional… Continue reading BERT Explained – A list of Frequently Asked Questions

How can Unsupervised Neural Machine Translation Work?

Neural Machine Translation has arguably reached human-level performance. However, effective training of these systems depends strongly on the availability of large amounts of parallel text, which is why supervised techniques have been less successful for low-resource language pairs. Unsupervised Machine Translation requires only monolingual corpora and is a viable alternative in… Continue reading How can Unsupervised Neural Machine Translation Work?